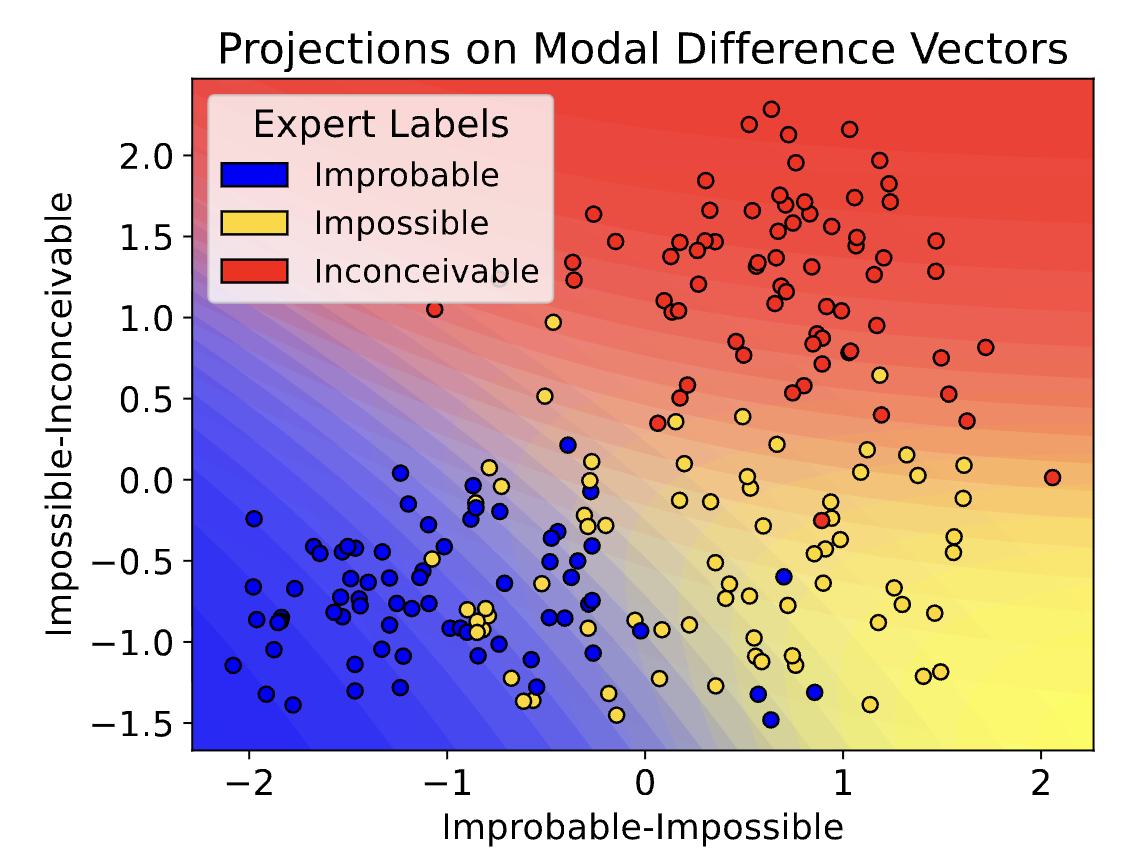

Is This Just Fantasy? Language Model Representations Reflect Human Judgments of Event Plausibility

Can language models distinguish the possible from the impossible?

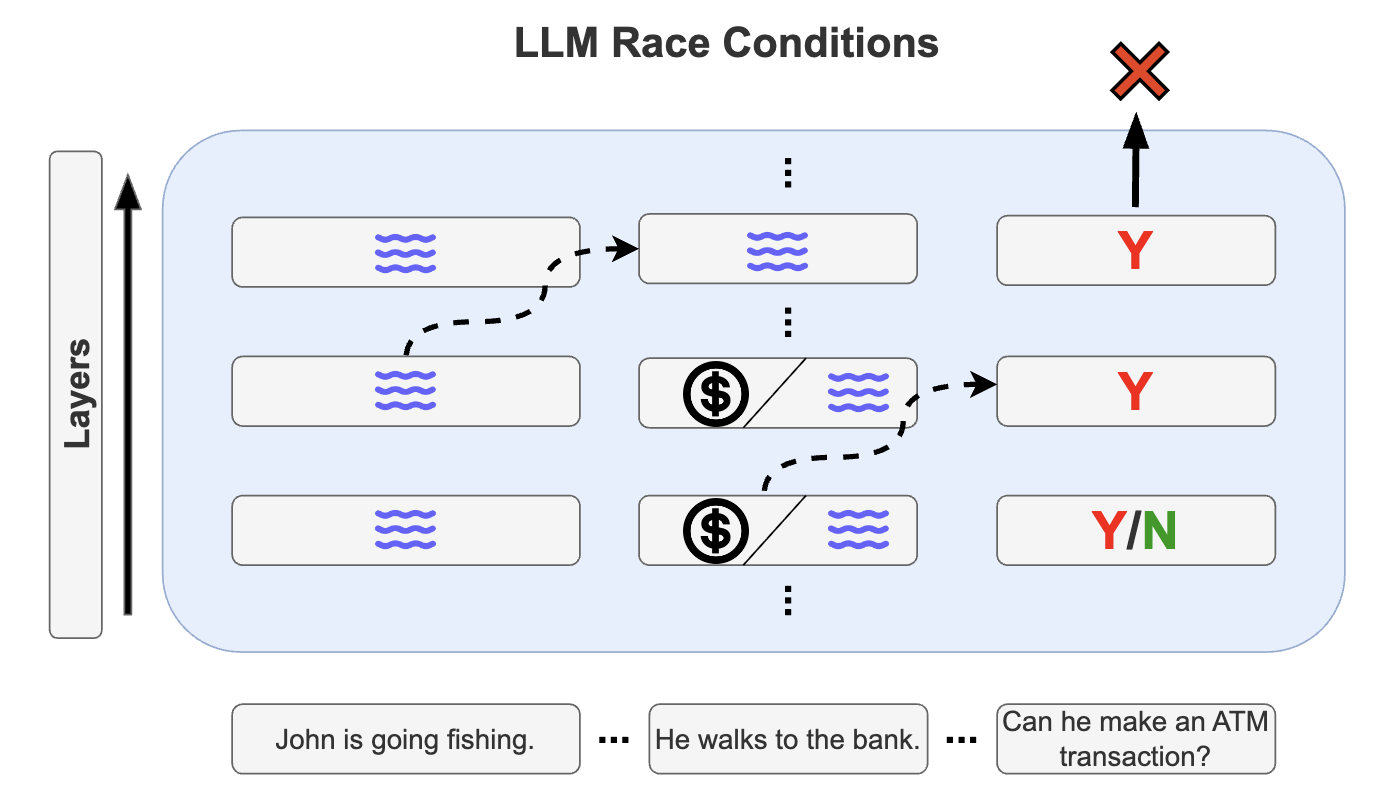

Racing Thoughts: Explaining Contextualization Errors in Large Language Models

Language models are usually great at incorporating context, but not always. What causes contextualization errors?

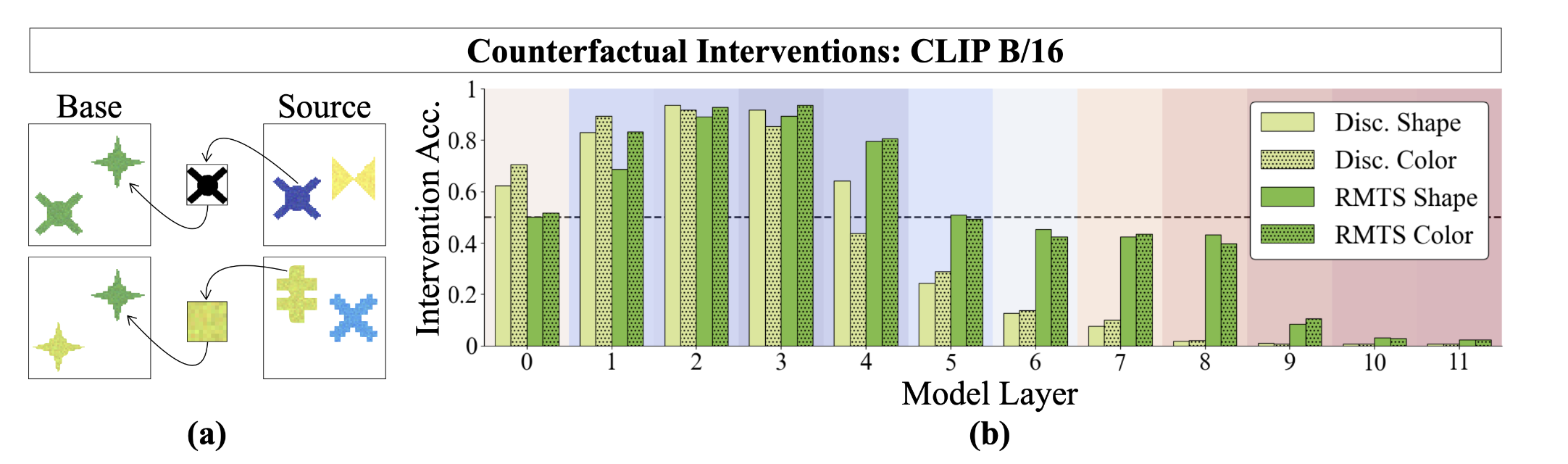

Beyond the Doors of Perception: Vision Transformers Represent Relations Between Objects

How do vision transformers solve a simple symbolic visual reasoning task?

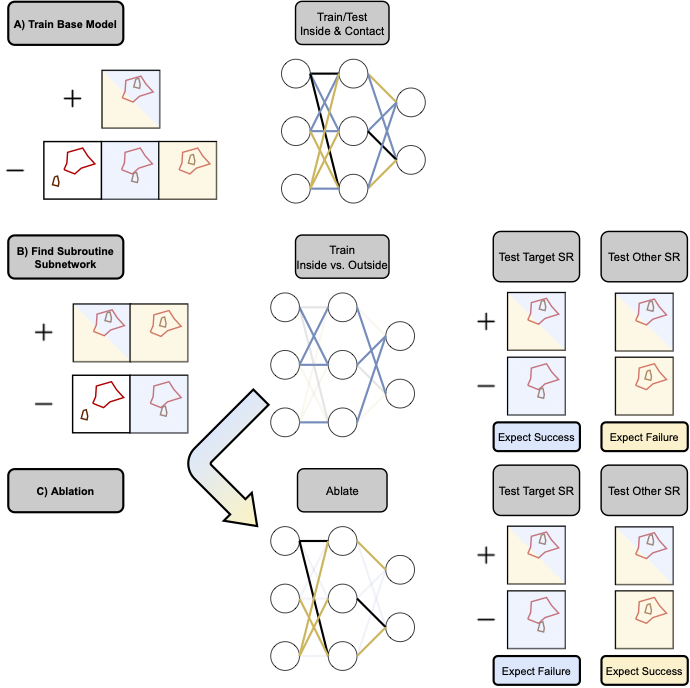

Break It Down: Evidence for Structural Compositionality in Neural Networks

Do neural networks self-organize into modular components when solving compositional tasks?